diff --git "a/02.\347\274\226\345\206\231\350\207\252\345\256\232\344\271\211Annotation.md" "b/02.\347\274\226\345\206\231\350\207\252\345\256\232\344\271\211Annotation.md"

index fbe3f55..06afcd1 100644

--- "a/02.\347\274\226\345\206\231\350\207\252\345\256\232\344\271\211Annotation.md"

+++ "b/02.\347\274\226\345\206\231\350\207\252\345\256\232\344\271\211Annotation.md"

@@ -18,7 +18,7 @@ public @interface TestCase { // 使用@Interface修饰这个是一个Annotation

以上就完成了一个Annotation接口的编写。接着我们写一个Test类来使用@TestCase这个注释。

```java

public class Test {

- @TestCase

+

public void m_1(){

System.out.println("This is Method with @TestCase!");

}

diff --git "a/03.\350\233\213\347\226\274\347\232\204AOP\346\246\202\345\277\265.md" "b/03.\350\233\213\347\226\274\347\232\204AOP\346\246\202\345\277\265.md"

index 94c614f..6e24207 100644

--- "a/03.\350\233\213\347\226\274\347\232\204AOP\346\246\202\345\277\265.md"

+++ "b/03.\350\233\213\347\226\274\347\232\204AOP\346\246\202\345\277\265.md"

@@ -1,6 +1,6 @@

#AOP介绍

-AOP全称Aspect Oriented Programming,面向切面编程。在事务管理,代码跟踪方面应用很广泛。教科书上的对于AOP概念的解释比较让人晕眩,下面是偶本人对AOP一些概念的理解:

+AOP全称Aspect Oriented Programming,面向切面编程。在事务管理,代码跟踪方面应用很广泛。教科书上的对于AOP概念的解释比较让人晕眩。

>**连接点(Joinpoint)**:程序流中可以用于代码植入的点,通常是某些边界,例如:类初始化前、类初始化后、方法执行前、方法执行后、方法抛异常后。

diff --git "a/05.\350\243\205\351\245\260\345\231\250\346\250\241\345\274\217.md" "b/05.\350\243\205\351\245\260\345\231\250\346\250\241\345\274\217.md"

index 4876258..78fc517 100644

--- "a/05.\350\243\205\351\245\260\345\231\250\346\250\241\345\274\217.md"

+++ "b/05.\350\243\205\351\245\260\345\231\250\346\250\241\345\274\217.md"

@@ -2,9 +2,6 @@

## 遇到的问题

- 学习的最好方法就是实践,想当年大学学《设计模式》这门课的时候,重修两次,补考还挂了两次,最后还是甩小聪明才拿到的毕业证。

-往事不多提,甚是可悲。

-

最近遇到一个问题,这里有一个controller的方法,方法里面需要获取request header的 __x-forwarded-for__ 属性的值:

```java

diff --git "a/07.\344\275\277\347\224\250socket\345\217\221\351\200\201HTTP POST\350\257\267\346\261\202.md" "b/07.\344\275\277\347\224\250socket\345\217\221\351\200\201HTTP POST\350\257\267\346\261\202.md"

index f5757b2..900f70a 100644

--- "a/07.\344\275\277\347\224\250socket\345\217\221\351\200\201HTTP POST\350\257\267\346\261\202.md"

+++ "b/07.\344\275\277\347\224\250socket\345\217\221\351\200\201HTTP POST\350\257\267\346\261\202.md"

@@ -54,16 +54,3 @@ socket.close();

```

一个HTTP POST的登陆请求就完成了。Java代码不难实现,唯一需要注意的HTTP报文的格式了。

-

-##Connection: close

-

- 细心的人,可能会发现上面的请求,控制台虽然正确地打印了服务器响应的登陆报文,但是控制台的线程是还没有断开的。

-即使客户端使用了socket.close()。

-

- 这里涉及TCP协议以及HTTP 1.1的长连接,先不详细解释。这里我们简单地使用一个Header就可以断开请求了。

-

-```java

-bw.write("Connection: close\r\n");

-```

-

- 把上面这一段代码复制到前面一段代码的Header部分即可。perfect!

diff --git "a/22.\347\274\226\347\240\201\350\247\243\347\240\201.md" "b/22.\347\274\226\347\240\201\350\247\243\347\240\201.md"

index 689d289..5281478 100644

--- "a/22.\347\274\226\347\240\201\350\247\243\347\240\201.md"

+++ "b/22.\347\274\226\347\240\201\350\247\243\347\240\201.md"

@@ -9,7 +9,7 @@

```Java

System.out.println("中国");

```

-1. 无论文件以任何编码格式载入到JVM中,"中国"二字都会以unicode码的形式存在JVM中(\u4e2d\u56fd);

+1. 无论文本以任何编码格式载入到JVM中,"中国"二字都会以unicode码的形式存在JVM中(\u4e2d\u56fd);

2. 假如IDE控制台的编码为UTF-8,当对"中国"二字进行控制台输出时,系统会将unicode码转为具体的UTF-8编码格式。(\u4e2d\u56fd -> e4b8ade59bbd)

```

@@ -77,4 +77,4 @@ public void gbk_unicode_utf8() throws DecoderException,

实际上,这里的unicode编码对应的是unicode的`UTF-16`编码。以16位定长的二进制表示一个unicode码。

-## 参考资料

\ No newline at end of file

+## 参考资料

diff --git "a/27.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md" "b/27.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md"

index 567e86e..caaaded 100644

--- "a/27.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md"

+++ "b/27.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md"

@@ -6,7 +6,7 @@

**对称加密算法的安全基于密钥的保密,所以密钥的安全与否决定了加密信息的安全!**

-虽然说**对称加密算法**不适用于安全要求较高的场合,对是对于一般应用来说,这已经足够了。与此同时,对称加密的速度远由于非对称加密,所以还是很多场景适用对称加密。

+虽然说**对称加密算法**不适用于安全要求较高的场合,对是对于一般应用来说,这已经足够了。与此同时,对称加密的速度远低于非对称加密,所以还是很多场景适用对称加密。

对称加密的速度比公钥加密快很多,在很多场合都需要对称加密。

@@ -36,7 +36,7 @@ IDEA(International Data Encryption Algorithm,国际数据加密标准)是

在加密之前,我们先要生成加密算法对应的密钥。我们之前所说的密钥长度都是基于位元为单位的。例如128位的密钥,则该密钥占用128个位元,以一个字节八位元,128密钥占用128 / 8 = 16个字节。使用byte[]数组存放,则byte[]的长度为16。

-为了方便存储和传输,我们通常使用Base64对密钥的字节组数进行编码。而ASCII编码有很多字符是不可打印的,所以不建议使用ASCII。当然你可以使用ASCII,当时很容易在存储或传输过程中丢失字节(在计算器中存储和传输没有问题,但是使用纸质就歇菜了)。当然你可以使用其他方式和编码,一般情况下都是使用Base64,约定俗成。

+为了方便存储和传输,我们通常使用Base64对密钥的字节组数进行编码。而ASCII编码有很多字符是不可打印的,所以不建议使用ASCII。当然你可以使用ASCII,但是很容易在存储或传输过程中丢失字节(在计算器中存储和传输没有问题,但是使用纸质就歇菜了)。当然你可以使用其他方式和编码,一般情况下都是使用Base64,约定俗成。

Java中使用`KeyGenerator`类来生成密钥。如下:

@@ -58,7 +58,7 @@ public void generateKey() throws NoSuchAlgorithmException {

Cipher是JCA中用于加密解密的类,它同时负责数据的加密与解密。在初始化时,需要为Cipher指定是加密或是解密模式。

-加密与加密的过程直接操作的是数据的字节数组,由于字符在JVM中是以unicode形式存在的,字符串的不同编码的字节数组序列是一样的,例如UTF-8和GBK的字节序列就不一样。所以在加密之前或加密之后需要将字符编码进行编码与解码。当然,这不是必须的,你可以使用系统默认的ASCII字符编码,只要统一即可。

+加密与加密的过程直接操作的是数据的字节数组,由于字符在JVM中是以unicode形式存在的,字符串的不同编码的字节数组序列是不一样的,例如UTF-8和GBK的字节序列就不一样。所以在加密之前或加密之后需要将字符编码进行编码与解码。当然,这不是必须的,你可以使用系统默认的ASCII字符编码,只要统一即可。

数据加密后是以字节数组存在的,跟密钥类似,为了方面存储和传输,我们将加密后的结果转为Base64表示形式。

@@ -111,4 +111,4 @@ public void decrypt() throws InvalidKeyException, NoSuchAlgorithmException, NoSu

* 密码学:http://zh.wikipedia.org/wiki/%E5%AF%86%E7%A2%BC%E5%AD%B8

* 经典密码:http://zh.wikipedia.org/wiki/%E7%B6%93%E5%85%B8%E5%AF%86%E7%A2%BC

* DES:http://zh.wikipedia.org/wiki/%E8%B3%87%E6%96%99%E5%8A%A0%E5%AF%86%E6%A8%99%E6%BA%96

-* 3DES:http://zh.wikipedia.org/wiki/3DES

\ No newline at end of file

+* 3DES:http://zh.wikipedia.org/wiki/3DES

diff --git "a/28.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\351\235\236\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md" "b/28.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\351\235\236\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md"

index 025fe05..0398372 100644

--- "a/28.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\351\235\236\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md"

+++ "b/28.Java\345\212\240\345\257\206\350\247\243\345\257\206\344\271\213\351\235\236\345\257\271\347\247\260\345\212\240\345\257\206\347\256\227\346\263\225.md"

@@ -10,7 +10,7 @@

###28.2.1 典型非对称加密算法:RSA

-RSA算法密钥的长度为512位至65535位之间,且必须是64的倍数。Java6默认的RSA算法的密钥长度是1014位。

+RSA算法密钥的长度为512位至65535位之间,且必须是64的倍数。Java6默认的RSA算法的密钥长度是1024位。

###28.2.2 常用非对称加密算法:EIGamal

@@ -74,4 +74,4 @@ public void t6_decrypt() throws NoSuchAlgorithmException, InvalidKeySpecExceptio

}

```

-##28.4 参考资料

\ No newline at end of file

+##28.4 参考资料

diff --git a/30.Java Runtime Data Areas.md b/30.Java Runtime Data Areas.md

new file mode 100644

index 0000000..ca4875d

--- /dev/null

+++ b/30.Java Runtime Data Areas.md

@@ -0,0 +1,76 @@

+#30.Java Runtime Data Areas.md

+

+ +

+##30.1 运行时数据区(Runtime Data Areas)

+

+Java虚拟机为程序的运行提供了各种不同的数据区,部分数据区与JVM的生命周期一致(随JVM启动而分配,JVM退出而释放),而另一部分则跟线程绑定,随着线程的启动和退出进行分配和释放。

+

+###30.1.1 程序计数器(Program Counter Rigister)

+

+Java虚拟机支持多个线程在同一时刻运行。每一个Java虚拟机线程拥有自己的程序计数器。在任意时刻,任意线程都处于一个方法中(称为当前方法(current method)),如果是该方法是**非本地方法(not native method)**,则该程序计数器记录着**Java虚拟机执行的当前指令的地址**。而如果该方法为**本地方法(native method)**,则该程序计数器为undefined。

+

+###30.1.2 Java虚拟机栈(Java Virtual Machine Stacks)

+

+每个Java虚拟机线程都拥有各自的**Java虚拟机栈**,并与线程同时被创建。Java虚拟机栈用于存储**帧(frames)**。Java虚拟机栈跟传统语言的栈相似:存储局部变量、结果,同时参与方法的调用和返回。

+Because the Java Virtual Machine stack is never manipulated directly except to push and pop frames, frames may be heap allocated. The memory for a Java Virtual Machine stack does not need to be contiguous.

+

+在第一版的Java虚拟机规范中,**Java虚拟机栈**做为**Java栈** 为人们所熟知。这个规范允许Java虚拟机栈是固定的大小或根据需要通过计算来动态地扩展和收缩。如果Java虚拟机堆的大小是固定的,(If the Java Virtual Machine stacks are of a fixed size, the size of each Java Virtual Machine stack may be chosen independently when that stack is created.)

+

+Java虚拟的实现可以提供让程序员或用户来控制Java虚拟栈初始大小,并且,可以让Java虚拟机栈在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与Java虚拟栈相关:

+* 如果线程需要的Java虚拟机栈大小超过限额,则抛出**StackOverflowError**。

+* 如果Java虚拟机栈被设置为可动态拓展,当拓展时由于受限于物理内存的限制而无法实现拓展时,则抛出**OutOfMemoryError**。

+

+###30.1.2 堆(Heap)

+

+Java虚拟机具有一个由所有Java虚拟机线程共享的一个堆。堆是**分配和储存所有的实例对象和数组的运行时数据区域**。

+

+堆是在Java虚拟机启动时被创建的。堆的对象储存空间通过**自动存储管理系统(garbage collector简称gc)**进行回收,并不能像c/c++语言那样显示地进行释放。Java虚拟机没有指定限制使用的gc,这个可以由程序员和用户根据自己的情况来进行选择。堆可以是固定大小的,也可以通过计算来动态地拓展和收缩。同时,堆的内容空间地址不需要是连续的。

+

+Java虚拟机的实现可以提供让程序员和用户控制初始的堆大小,并且,可以让栈在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与堆相关:

+

+* 当堆需要拓展的内存大小大于自动存储管理系统所能提供的内存大小时,抛出**OutOfMemoryError**。

+

+### 方法区(Method Area)

+

+Java虚拟机拥有一个由所有Java虚拟机线程共享的方法区。方法区类似于传统语言的用于存储编译代码的内存区域。(The method area is analogous to the storage area for compiled code of a conventional language or analogous to the "text" segment in an operating system process. )它用于存储每个类的类结构,例如**运行时常量池(Runtime Constant Pool)**,字段和方法数据,以及方法和构造方法的代码,包括类、实例和接口在初始化时使用的特殊方法。

+

+方法区也是在Java虚拟机启动时创建的。虽然方法区作为堆的一部分,但简单的Java虚拟机的实现可能不会对这部分区域进行gc操作和内存紧凑操作。Java虚拟机规范并不强制规范方法区的存储位置和管理已编译代码的策略。方法区可以是固定大小,也可以根据实际需要对方法区的大小进行拓展和紧凑操作。同时,方法区的内存不需要是连续的。

+

+Java虚拟机的实现应该提供让程序员和用户控制初始的方法区内存大小,并且,可以让方法区在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与方法区相关:

+* 如果方法区不能提供满足需要分配的内存时,Java虚拟机抛出**OutOfMemoryError**。

+

+###运行时常量池(Runtime Constant Pool)

+**运行时常量池**是一个类或接口的class文件的中**constant_pool**表的运行时表示。它包含多种常量:编译期的数值型字面量,运行时的方法和字段的引用。运行时常量池提供类似于一个传统的编程语言中的符号表的功能,但它比典型的符号表包含了更广泛的范围中的数据。

+

+每个运行时常量池都是在Java虚拟机的方法区中进行分配的(也就是说运行时常量池是方法区中的一部分)。类/接口的运行时常量池在类/接口创建时被Java虚拟机构造。

+

+以下异常情况与类/接口的运行时常量池的创建相关:

+

+当创建一个类或接口时,如果运行时常量池的创建需要更多的内存,而方法区没有足够的内存可以提供时,Java虚拟机抛出**OutOfMemoryError**。

+

+###本地方法栈(Native Method Stacks)

+Java虚拟机应该使用传统的栈(通俗地将:C栈)来对**本地方法**(非Java实现的方法)进行支持。Native method stacks may also be used by the implementation of an interpreter for the Java Virtual Machine's instruction set in a language such as C. Java Virtual Machine implementations that cannot load native methods and that do not themselves rely on conventional stacks need not supply native method stacks. If supplied, native method stacks are typically allocated per thread when each thread is created.

+

+This specification permits native method stacks either to be of a fixed size or to dynamically expand and contract as required by the computation. If the native method stacks are of a fixed size, the size of each native method stack may be chosen independently when that stack is created.

+

+

+Java虚拟机的实现应当提供这样的功能:程序员和用户可以控制本地方法栈的初始化大小,并且,可以让本地方法栈在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与本地方法栈相关:

+* 如果本地方法栈需要更多的内存,但无法进行分配时,Java虚拟机抛出**StackOverflowError**。

+

+

+If native method stacks can be dynamically expanded and native method stack expansion is attempted but insufficient memory can be made available, or if insufficient memory can be made available to create the initial native method stack for a new thread, the Java Virtual Machine throws an OutOfMemoryError.

+

+### 拓展阅读

+

+* 翻译自:http://docs.oracle.com/javase/specs/jvms/se8/html/jvms-2.html#jvms-2.5

+* understanding-jvm-internals: http://www.cubrid.org/blog/dev-platform/understanding-jvm-internals/

+* understanding-java-garbage-collection: http://www.cubrid.org/blog/dev-platform/understanding-java-garbage-collection/

\ No newline at end of file

diff --git a/31.Understanding Java Garbage Collection.md b/31.Understanding Java Garbage Collection.md

new file mode 100644

index 0000000..51beb95

--- /dev/null

+++ b/31.Understanding Java Garbage Collection.md

@@ -0,0 +1,120 @@

+#31.Understanding Java Garbage Collection

+

+理解GC(Garbage Collection)的工作原理对Java编程有什么益处呢?满足软件工程师的求知欲或许是一个不错的原因,但与此同时,也可以帮助你编写更加优秀的Java应用程序。

+

+这是我的个人的主观意见,但是我相信那些深谙GC的人往往更容易成为一个优秀的Java工程师。如果你对GC感兴趣,那么意味着你有不错的开发经验。如果你有过仔细选择合适的GC算法经验,这意味着你完全了解你开发应用程序的功能特点。当然,这也许只是优秀开发者的普遍衡量标准,然而我要说的是,要想成为一名优秀的开发者,理解GC是一门必修的课程。

+

+这篇文章的主要目的是以尽量简洁的方式向你讲解GC。我希望这篇文章能切切实实地对你有所帮助。回到正题,在GC中有个词汇**stop-the-word**,stop-the-word这个过程总会发生,无论你选择何种GC算法。stop-the-world意味着在**执行GC的过程中,JVM会中断所有的应用程序线程**( 除了GC需要的线程外)。被中断的线程会在GC完成后恢复。我们所关注的**GC调优就在于如何减少stop-the-world的运行时间**。

+

+## 垃圾收集器(Generational Garbage Collection)

+

+Java代码并不能显式地对内存进行分配和移除。有些人会将对象设置为null或者调用System.gc()方法来**显式**地移除内存空间。将对象设置为null没有大不了的,当调用System.gc()方法却会大大地响应系统的性能(我们并不需要这样做)。

+

+在Java中,开发者并不需要显式地在代码中释放内存,垃圾收集器会帮助我们找到不需要的对象并讲它们移除。垃圾收集器只所以被引入使用是基于以下两个假定前提:

+

+1. 大多数对象很快成为不可达状态;

+2. **老对象引用新对象**这种情况总是控制在很小的数量内。

+

+这两个假定前提被成为**弱世代假说(Weak generational hypothesis)**,基于这个假设,在HotSpot虚拟机中,内存(切确地说是Java Heap)被分为两种:**新生代(Young Generation)**与**老年代(Old Generation)**。

+

+新生代:绝大部分的新创建的对象都被分配到这里。由于大部分的对象很快会成为不可达状态,很多新创建的对象都分配到新生代,然后很快从这个区域被释放。对象从新生代被释放,我们称这个过程为**Minor GC**。

+

+老年代:当在新生代的对象没有成为不可达状态,并且从新生代存活下来后,我们会将这些对象复制到老年代。老年代的储存空间会比新生代的要大,所以在老年代发生GC的频率要远远低于在新生代的GC频率。对象从老年代被释放,我们称这个过程为**major GC**或**full GC**。

+

+我们看下以下两个图表:

+

+

+

+##30.1 运行时数据区(Runtime Data Areas)

+

+Java虚拟机为程序的运行提供了各种不同的数据区,部分数据区与JVM的生命周期一致(随JVM启动而分配,JVM退出而释放),而另一部分则跟线程绑定,随着线程的启动和退出进行分配和释放。

+

+###30.1.1 程序计数器(Program Counter Rigister)

+

+Java虚拟机支持多个线程在同一时刻运行。每一个Java虚拟机线程拥有自己的程序计数器。在任意时刻,任意线程都处于一个方法中(称为当前方法(current method)),如果是该方法是**非本地方法(not native method)**,则该程序计数器记录着**Java虚拟机执行的当前指令的地址**。而如果该方法为**本地方法(native method)**,则该程序计数器为undefined。

+

+###30.1.2 Java虚拟机栈(Java Virtual Machine Stacks)

+

+每个Java虚拟机线程都拥有各自的**Java虚拟机栈**,并与线程同时被创建。Java虚拟机栈用于存储**帧(frames)**。Java虚拟机栈跟传统语言的栈相似:存储局部变量、结果,同时参与方法的调用和返回。

+Because the Java Virtual Machine stack is never manipulated directly except to push and pop frames, frames may be heap allocated. The memory for a Java Virtual Machine stack does not need to be contiguous.

+

+在第一版的Java虚拟机规范中,**Java虚拟机栈**做为**Java栈** 为人们所熟知。这个规范允许Java虚拟机栈是固定的大小或根据需要通过计算来动态地扩展和收缩。如果Java虚拟机堆的大小是固定的,(If the Java Virtual Machine stacks are of a fixed size, the size of each Java Virtual Machine stack may be chosen independently when that stack is created.)

+

+Java虚拟的实现可以提供让程序员或用户来控制Java虚拟栈初始大小,并且,可以让Java虚拟机栈在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与Java虚拟栈相关:

+* 如果线程需要的Java虚拟机栈大小超过限额,则抛出**StackOverflowError**。

+* 如果Java虚拟机栈被设置为可动态拓展,当拓展时由于受限于物理内存的限制而无法实现拓展时,则抛出**OutOfMemoryError**。

+

+###30.1.2 堆(Heap)

+

+Java虚拟机具有一个由所有Java虚拟机线程共享的一个堆。堆是**分配和储存所有的实例对象和数组的运行时数据区域**。

+

+堆是在Java虚拟机启动时被创建的。堆的对象储存空间通过**自动存储管理系统(garbage collector简称gc)**进行回收,并不能像c/c++语言那样显示地进行释放。Java虚拟机没有指定限制使用的gc,这个可以由程序员和用户根据自己的情况来进行选择。堆可以是固定大小的,也可以通过计算来动态地拓展和收缩。同时,堆的内容空间地址不需要是连续的。

+

+Java虚拟机的实现可以提供让程序员和用户控制初始的堆大小,并且,可以让栈在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与堆相关:

+

+* 当堆需要拓展的内存大小大于自动存储管理系统所能提供的内存大小时,抛出**OutOfMemoryError**。

+

+### 方法区(Method Area)

+

+Java虚拟机拥有一个由所有Java虚拟机线程共享的方法区。方法区类似于传统语言的用于存储编译代码的内存区域。(The method area is analogous to the storage area for compiled code of a conventional language or analogous to the "text" segment in an operating system process. )它用于存储每个类的类结构,例如**运行时常量池(Runtime Constant Pool)**,字段和方法数据,以及方法和构造方法的代码,包括类、实例和接口在初始化时使用的特殊方法。

+

+方法区也是在Java虚拟机启动时创建的。虽然方法区作为堆的一部分,但简单的Java虚拟机的实现可能不会对这部分区域进行gc操作和内存紧凑操作。Java虚拟机规范并不强制规范方法区的存储位置和管理已编译代码的策略。方法区可以是固定大小,也可以根据实际需要对方法区的大小进行拓展和紧凑操作。同时,方法区的内存不需要是连续的。

+

+Java虚拟机的实现应该提供让程序员和用户控制初始的方法区内存大小,并且,可以让方法区在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与方法区相关:

+* 如果方法区不能提供满足需要分配的内存时,Java虚拟机抛出**OutOfMemoryError**。

+

+###运行时常量池(Runtime Constant Pool)

+**运行时常量池**是一个类或接口的class文件的中**constant_pool**表的运行时表示。它包含多种常量:编译期的数值型字面量,运行时的方法和字段的引用。运行时常量池提供类似于一个传统的编程语言中的符号表的功能,但它比典型的符号表包含了更广泛的范围中的数据。

+

+每个运行时常量池都是在Java虚拟机的方法区中进行分配的(也就是说运行时常量池是方法区中的一部分)。类/接口的运行时常量池在类/接口创建时被Java虚拟机构造。

+

+以下异常情况与类/接口的运行时常量池的创建相关:

+

+当创建一个类或接口时,如果运行时常量池的创建需要更多的内存,而方法区没有足够的内存可以提供时,Java虚拟机抛出**OutOfMemoryError**。

+

+###本地方法栈(Native Method Stacks)

+Java虚拟机应该使用传统的栈(通俗地将:C栈)来对**本地方法**(非Java实现的方法)进行支持。Native method stacks may also be used by the implementation of an interpreter for the Java Virtual Machine's instruction set in a language such as C. Java Virtual Machine implementations that cannot load native methods and that do not themselves rely on conventional stacks need not supply native method stacks. If supplied, native method stacks are typically allocated per thread when each thread is created.

+

+This specification permits native method stacks either to be of a fixed size or to dynamically expand and contract as required by the computation. If the native method stacks are of a fixed size, the size of each native method stack may be chosen independently when that stack is created.

+

+

+Java虚拟机的实现应当提供这样的功能:程序员和用户可以控制本地方法栈的初始化大小,并且,可以让本地方法栈在最小值和最大值的限制范围内根据实际需要动态地拓展或收缩。

+

+以下异常情况与本地方法栈相关:

+* 如果本地方法栈需要更多的内存,但无法进行分配时,Java虚拟机抛出**StackOverflowError**。

+

+

+If native method stacks can be dynamically expanded and native method stack expansion is attempted but insufficient memory can be made available, or if insufficient memory can be made available to create the initial native method stack for a new thread, the Java Virtual Machine throws an OutOfMemoryError.

+

+### 拓展阅读

+

+* 翻译自:http://docs.oracle.com/javase/specs/jvms/se8/html/jvms-2.html#jvms-2.5

+* understanding-jvm-internals: http://www.cubrid.org/blog/dev-platform/understanding-jvm-internals/

+* understanding-java-garbage-collection: http://www.cubrid.org/blog/dev-platform/understanding-java-garbage-collection/

\ No newline at end of file

diff --git a/31.Understanding Java Garbage Collection.md b/31.Understanding Java Garbage Collection.md

new file mode 100644

index 0000000..51beb95

--- /dev/null

+++ b/31.Understanding Java Garbage Collection.md

@@ -0,0 +1,120 @@

+#31.Understanding Java Garbage Collection

+

+理解GC(Garbage Collection)的工作原理对Java编程有什么益处呢?满足软件工程师的求知欲或许是一个不错的原因,但与此同时,也可以帮助你编写更加优秀的Java应用程序。

+

+这是我的个人的主观意见,但是我相信那些深谙GC的人往往更容易成为一个优秀的Java工程师。如果你对GC感兴趣,那么意味着你有不错的开发经验。如果你有过仔细选择合适的GC算法经验,这意味着你完全了解你开发应用程序的功能特点。当然,这也许只是优秀开发者的普遍衡量标准,然而我要说的是,要想成为一名优秀的开发者,理解GC是一门必修的课程。

+

+这篇文章的主要目的是以尽量简洁的方式向你讲解GC。我希望这篇文章能切切实实地对你有所帮助。回到正题,在GC中有个词汇**stop-the-word**,stop-the-word这个过程总会发生,无论你选择何种GC算法。stop-the-world意味着在**执行GC的过程中,JVM会中断所有的应用程序线程**( 除了GC需要的线程外)。被中断的线程会在GC完成后恢复。我们所关注的**GC调优就在于如何减少stop-the-world的运行时间**。

+

+## 垃圾收集器(Generational Garbage Collection)

+

+Java代码并不能显式地对内存进行分配和移除。有些人会将对象设置为null或者调用System.gc()方法来**显式**地移除内存空间。将对象设置为null没有大不了的,当调用System.gc()方法却会大大地响应系统的性能(我们并不需要这样做)。

+

+在Java中,开发者并不需要显式地在代码中释放内存,垃圾收集器会帮助我们找到不需要的对象并讲它们移除。垃圾收集器只所以被引入使用是基于以下两个假定前提:

+

+1. 大多数对象很快成为不可达状态;

+2. **老对象引用新对象**这种情况总是控制在很小的数量内。

+

+这两个假定前提被成为**弱世代假说(Weak generational hypothesis)**,基于这个假设,在HotSpot虚拟机中,内存(切确地说是Java Heap)被分为两种:**新生代(Young Generation)**与**老年代(Old Generation)**。

+

+新生代:绝大部分的新创建的对象都被分配到这里。由于大部分的对象很快会成为不可达状态,很多新创建的对象都分配到新生代,然后很快从这个区域被释放。对象从新生代被释放,我们称这个过程为**Minor GC**。

+

+老年代:当在新生代的对象没有成为不可达状态,并且从新生代存活下来后,我们会将这些对象复制到老年代。老年代的储存空间会比新生代的要大,所以在老年代发生GC的频率要远远低于在新生代的GC频率。对象从老年代被释放,我们称这个过程为**major GC**或**full GC**。

+

+我们看下以下两个图表:

+

+ +

+以上图中**永久代(Permanent Generation)**被称为**方法区**,它用于存储class文件和运行时常量池。所以,这里的存储空间并不用于“收留”从老年代存活下来的对象。当GC可能会在这个区域发生,我们也把在这个区域发生的GC算作full GC。

+

+有些人会有疑问:当老年代的对象需要引用新生代的对象,这时候会发生什么情况?

+

+为了处理这些情况,在老年代中会有个叫做**卡表(card table)**的东西,它是一个512字节的数据块。在老年代的对象需要引用新生代的对象时,会被记录到这里。然后,当新生代的GC执行时,这个**card table**会被检查以确定对象是否应该被GC处理,这样做可以防止对老年代的所有对象进行遍历。这个卡表使用一个被称为**写屏障**的装置进行管理,它可以让minor GC的性能更加高效,虽然它本身也需要一定的开销,但是整体的开销却是减少的。

+

+

+

+以上图中**永久代(Permanent Generation)**被称为**方法区**,它用于存储class文件和运行时常量池。所以,这里的存储空间并不用于“收留”从老年代存活下来的对象。当GC可能会在这个区域发生,我们也把在这个区域发生的GC算作full GC。

+

+有些人会有疑问:当老年代的对象需要引用新生代的对象,这时候会发生什么情况?

+

+为了处理这些情况,在老年代中会有个叫做**卡表(card table)**的东西,它是一个512字节的数据块。在老年代的对象需要引用新生代的对象时,会被记录到这里。然后,当新生代的GC执行时,这个**card table**会被检查以确定对象是否应该被GC处理,这样做可以防止对老年代的所有对象进行遍历。这个卡表使用一个被称为**写屏障**的装置进行管理,它可以让minor GC的性能更加高效,虽然它本身也需要一定的开销,但是整体的开销却是减少的。

+

+ +

+## 新生代(Composition of the Young Generation)

+

+为了深入理解GC,我们来看一下新生代。新生代被划分为3个区域空间:

+

+* 一个伊甸园(One Eden Space)

+* 两个幸存区 (Two Survivor Spaces)

+

+这三个区域空间中,有两个是幸存区(Survivor Spaces)。每个区域空间的执行过程如下:

+

+1. 绝大多数新创建的对象都首先被分配到伊甸园(Eden Space)。

+2. 当伊甸园的GC执行以后,存活下来的对象会被移动到其中一个幸存区(这个幸存区存放着之前存活下来的对象)。

+3. 一旦幸存区满了以后,该幸存区存活下来的对象会移动到另外一个幸存区,然后该幸存区会重置为空状态。

+4. 在多次幸存区的GC执行后而存活下来的对象会被移动到老年代。

+

+在这个过程中,其中一个幸存区必须要保持为空状态。如果两个幸存区都是空状态或者都同时存在数据,你的系统一定出现了什么错误。

+

+数据通过minor GC并堆砌进入老年代的过程如下图所示:

+

+

+

+## 新生代(Composition of the Young Generation)

+

+为了深入理解GC,我们来看一下新生代。新生代被划分为3个区域空间:

+

+* 一个伊甸园(One Eden Space)

+* 两个幸存区 (Two Survivor Spaces)

+

+这三个区域空间中,有两个是幸存区(Survivor Spaces)。每个区域空间的执行过程如下:

+

+1. 绝大多数新创建的对象都首先被分配到伊甸园(Eden Space)。

+2. 当伊甸园的GC执行以后,存活下来的对象会被移动到其中一个幸存区(这个幸存区存放着之前存活下来的对象)。

+3. 一旦幸存区满了以后,该幸存区存活下来的对象会移动到另外一个幸存区,然后该幸存区会重置为空状态。

+4. 在多次幸存区的GC执行后而存活下来的对象会被移动到老年代。

+

+在这个过程中,其中一个幸存区必须要保持为空状态。如果两个幸存区都是空状态或者都同时存在数据,你的系统一定出现了什么错误。

+

+数据通过minor GC并堆砌进入老年代的过程如下图所示:

+

+ +

+在HotSpot虚拟机中,有两项被用于快速分配内存的技术。一种被成为**bump-the-pointer**,而另一种是所谓的线程局部缓冲器TLABs (Thread-Local Allocation Buffers)。

+

+Bump-the-pointer technique tracks the last object allocated to the Eden space. That object will be located on top of the Eden space. And if there is an object created afterwards, it checks only if the size of the object is suitable for the Eden space. If the said object seems right, it will be placed in the Eden space, and the new object goes on top. So, when new objects are created, only the lastly added object needs to be checked, which allows much faster memory allocations. However, it is a different story if we consider a multithreaded environment. To save objects used by multiple threads in the Eden space for Thread-Safe, an inevitable lock will occur and the performance will drop due to the lock-contention. TLABs is the solution to this problem in HotSpot VM. This allows each thread to have a small portion of its Eden space that corresponds to its own share. As each thread can only access to their own TLAB, even the bump-the-pointer technique will allow memory allocations without a lock.

+

+你并不需要技术以上提到的两种技术。你需要记住的是:当对象创建之后会首先分配到伊甸园空间,然后通过在幸存区的长时间存活被晋升到老年代空间。

+

+##老年代的GC(GC for the Old Generation)

+

+老年代基本在空间被沾满时才执行GC操作。GC的执行过程根据GC的类型不同而有所差异,如果你对不同的GC类型有所了解,则会明白其中的差异所在。

+

+根据JDK7,共有5中GC类型:

+

+* Serial GC

+* Parallel GC

+* Parallel Old GC (Parrallel Compacting GC)

+* Concurrent Mark & Sweep GC (or CMS)

+* Garbage First GC (G1)

+

+其中,串行GC不能使用的操作的服务器上。这种类型的GC创建时有在台式计算机上只有一个CPU核心。使用该系列GC将显著删除应用程序的性能。 使用这种将很明显地降低应用程序的性能。

+

+现在让我们来了解每种GC的类型:

+

+###串行GC( Serial GC (-XX:+UseSerialGC))

+

+(The GC in the young generation uses the type we explained in the previous paragraph. ?)

+在新生代中我们使用一种称为**标记-清除-紧凑(mark-sweep-compact)**的算法。

+

+这种算法的第一步就是对新生代的幸存对象进行标记。然后,它从堆的从前往后逐个清理不需要的对象。最后对幸存的对象进行紧凑,使它们在位于连续的内存空间。这个过程会将堆分为两部分:一部分有数据,一部分没数据。Serial GC适用于小的内存空间和少量的CPU核心的机器。

+

+###并行GC (Parallel GC (-XX:+UseParallelGC))

+

+

+

+在HotSpot虚拟机中,有两项被用于快速分配内存的技术。一种被成为**bump-the-pointer**,而另一种是所谓的线程局部缓冲器TLABs (Thread-Local Allocation Buffers)。

+

+Bump-the-pointer technique tracks the last object allocated to the Eden space. That object will be located on top of the Eden space. And if there is an object created afterwards, it checks only if the size of the object is suitable for the Eden space. If the said object seems right, it will be placed in the Eden space, and the new object goes on top. So, when new objects are created, only the lastly added object needs to be checked, which allows much faster memory allocations. However, it is a different story if we consider a multithreaded environment. To save objects used by multiple threads in the Eden space for Thread-Safe, an inevitable lock will occur and the performance will drop due to the lock-contention. TLABs is the solution to this problem in HotSpot VM. This allows each thread to have a small portion of its Eden space that corresponds to its own share. As each thread can only access to their own TLAB, even the bump-the-pointer technique will allow memory allocations without a lock.

+

+你并不需要技术以上提到的两种技术。你需要记住的是:当对象创建之后会首先分配到伊甸园空间,然后通过在幸存区的长时间存活被晋升到老年代空间。

+

+##老年代的GC(GC for the Old Generation)

+

+老年代基本在空间被沾满时才执行GC操作。GC的执行过程根据GC的类型不同而有所差异,如果你对不同的GC类型有所了解,则会明白其中的差异所在。

+

+根据JDK7,共有5中GC类型:

+

+* Serial GC

+* Parallel GC

+* Parallel Old GC (Parrallel Compacting GC)

+* Concurrent Mark & Sweep GC (or CMS)

+* Garbage First GC (G1)

+

+其中,串行GC不能使用的操作的服务器上。这种类型的GC创建时有在台式计算机上只有一个CPU核心。使用该系列GC将显著删除应用程序的性能。 使用这种将很明显地降低应用程序的性能。

+

+现在让我们来了解每种GC的类型:

+

+###串行GC( Serial GC (-XX:+UseSerialGC))

+

+(The GC in the young generation uses the type we explained in the previous paragraph. ?)

+在新生代中我们使用一种称为**标记-清除-紧凑(mark-sweep-compact)**的算法。

+

+这种算法的第一步就是对新生代的幸存对象进行标记。然后,它从堆的从前往后逐个清理不需要的对象。最后对幸存的对象进行紧凑,使它们在位于连续的内存空间。这个过程会将堆分为两部分:一部分有数据,一部分没数据。Serial GC适用于小的内存空间和少量的CPU核心的机器。

+

+###并行GC (Parallel GC (-XX:+UseParallelGC))

+

+ +

+ 看上图,你可以清楚看到Serial GC与Parallel之间的差异。Serial GC仅使用一个线程去执行GC过程,而Parallel GC会使用多个线程去执行GC过程,因此,可得到更加优秀的性能。当机器拥有很大的内存和较多的CPU核心时,Paraller GC会表现得非常不错。Parallel GC也被称为**throughput GC**。

+

+###Parallel Old GC

+

+Parallel Old GC从JDK 5 update版本开始得到支持。相比Parallel GC,唯一的区别在于:Parallel Old GC只工作于老年代。它通过三个步骤进行工作:标记-总结-紧凑。The summary step identifies the surviving objects separately for the areas that the GC have previously performed, and thus different from the sweep step of the mark-sweep-compact algorithm. It goes through a little more complicated steps.

+

+###Concurrent Mark & Sweep GC (or CMS)

+

+

+

+ 看上图,你可以清楚看到Serial GC与Parallel之间的差异。Serial GC仅使用一个线程去执行GC过程,而Parallel GC会使用多个线程去执行GC过程,因此,可得到更加优秀的性能。当机器拥有很大的内存和较多的CPU核心时,Paraller GC会表现得非常不错。Parallel GC也被称为**throughput GC**。

+

+###Parallel Old GC

+

+Parallel Old GC从JDK 5 update版本开始得到支持。相比Parallel GC,唯一的区别在于:Parallel Old GC只工作于老年代。它通过三个步骤进行工作:标记-总结-紧凑。The summary step identifies the surviving objects separately for the areas that the GC have previously performed, and thus different from the sweep step of the mark-sweep-compact algorithm. It goes through a little more complicated steps.

+

+###Concurrent Mark & Sweep GC (or CMS)

+

+ +

+ 如你上图所看的,Concurrent Mark-Sweep GC比之前介绍的几种GC都要复杂得多。早期的**初始标记阶**段很简单,它的主要功能是最接近根类加载器的对象进行标记,这个阶段的停顿时间十分短暂。在**并发标记**阶段,对刚刚幸存下来的对象的引用进行跟踪和检查,这个过程中,其他的JVM线程不会被中止(也就是没有stop-the-world)。在**重新标记**阶段,会对**并发标记**阶段新添加或停止的引用进行确认。最后,在**并发清除**阶段,对不可达对象进行清理工作(也就GC动作),这个过程,其他的JVM线程也不会被中止。由于这种GC工作方式,GC的停顿时间非常短暂。CMS GC也被称为**低延迟GC**,这对那些对响应时间有严格要求的应用程序是至关重要的。

+

+虽然这种GC垃圾收集的停顿时间非常短暂,但是他对内存大小和CPU内核数量与性能有着更高的要求。

+虽然这种GC类型具有极其短暂的停顿时间,但它也有以下缺点:

+

+* 对内存和CPU的要求更加高。

+* 不提供默认的内存紧凑步骤

+

+在使用这种GC之前,你需要仔细地review。此外,在内存紧凑阶段,如果存在大量的内存碎片,那么这种GC需要停顿时间可能会比其他的GC类型的要长。你需要仔细检查内存紧凑发生的频率和时间。

+

+###Garbage First GC (G1)

+

+最后,让我们来看下Garbage First GC(G1)

+

+

+

+ 如你上图所看的,Concurrent Mark-Sweep GC比之前介绍的几种GC都要复杂得多。早期的**初始标记阶**段很简单,它的主要功能是最接近根类加载器的对象进行标记,这个阶段的停顿时间十分短暂。在**并发标记**阶段,对刚刚幸存下来的对象的引用进行跟踪和检查,这个过程中,其他的JVM线程不会被中止(也就是没有stop-the-world)。在**重新标记**阶段,会对**并发标记**阶段新添加或停止的引用进行确认。最后,在**并发清除**阶段,对不可达对象进行清理工作(也就GC动作),这个过程,其他的JVM线程也不会被中止。由于这种GC工作方式,GC的停顿时间非常短暂。CMS GC也被称为**低延迟GC**,这对那些对响应时间有严格要求的应用程序是至关重要的。

+

+虽然这种GC垃圾收集的停顿时间非常短暂,但是他对内存大小和CPU内核数量与性能有着更高的要求。

+虽然这种GC类型具有极其短暂的停顿时间,但它也有以下缺点:

+

+* 对内存和CPU的要求更加高。

+* 不提供默认的内存紧凑步骤

+

+在使用这种GC之前,你需要仔细地review。此外,在内存紧凑阶段,如果存在大量的内存碎片,那么这种GC需要停顿时间可能会比其他的GC类型的要长。你需要仔细检查内存紧凑发生的频率和时间。

+

+###Garbage First GC (G1)

+

+最后,让我们来看下Garbage First GC(G1)

+

+ +

+如果你想要了解G1 GC,首先你要忘记关于新生代和老年代的一切知识点。Java堆(新生代&老年代)被划分为一个个大小固定的区域,对象被分派到这些区域中,如果一个区域被占满,则继续分配另外的区域,同时在后台维护一个优先列表,每次在允许的GC时间内,优先回收占用内存多的对象,这是就G1的来源。

+

+待续...

+

+## 参考文档

+* 翻译自:http://www.cubrid.org/blog/dev-platform/understanding-java-garbage-collection/

diff --git "a/32.\350\277\255\344\273\243\345\231\250\346\250\241\345\274\217.md" "b/32.\350\277\255\344\273\243\345\231\250\346\250\241\345\274\217.md"

new file mode 100644

index 0000000..2238327

--- /dev/null

+++ "b/32.\350\277\255\344\273\243\345\231\250\346\250\241\345\274\217.md"

@@ -0,0 +1,69 @@

+# 32.迭代器模式

+

+## 32.1 从Iterable接口说起

+

+ 在Java中的集合类接口有Set、List、Map、Queue,而这些接口的子类不计其数。以List接口为例,常用的实现类有:ArrayList、LinkedList、Stack、Vector。每个List子类实现的方式各不一样,ArrayList使用数组,LinkedList使用链表,Stack和Vector可以数组,甚至有些其他的集合类使用混合的数据结构。

+

+ 为了对外隐藏集合类的实现方式,保持良好的封装性,必须要统一这些集合类的迭代接口。同时调用者也无效关心集合类的底层实现方式,使用统一的接口即可对集合进行迭代。

+

+ 这不禁让人想到Java的`Iterable`接口,这也是迭代器模式在JDK中的经典实例。

+

+## 32.2 ArrayList实现Iterable接口

+

+Iterable.java 实现:

+```Java

+public interface Iterable {

+ Iterator iterator();

+}

+```

+Iterator.java 实现

+```Java

+public interface Iterator {

+ boolean hasNext();

+ E next();

+}

+```

+ArrayList.java 实现 Iterable接口

+```Java

+ @Override

+ public Iterator iterator() {

+ return new ArrayListIterator();

+ }

+```

+

+ArrayListIterator.java 实现

+```Java

+public class ArrayListIterator {

+ int cursor;

+ int lastRet = -1;

+

+ public boolean hasNext() {

+ return cursor != size;

+ }

+

+ public E next() {

+ int i = cursor;

+ if (i >= size)

+ throw new NoSuchElementException();

+ Object[] elementData = ArrayList.this.elementData;

+ cursor = i + 1;

+ return (E) elementData[lastRet = i];

+ }

+}

+```

+

+为了减少类文件和易于维护,我们通常把ArrayListIterator.java作为ArrayList的内部类,或者在ArrayList的iterator方法中世界采用匿名内部类,这里我们使用的外部类。

+

+## 32.3 迭代器模式

+

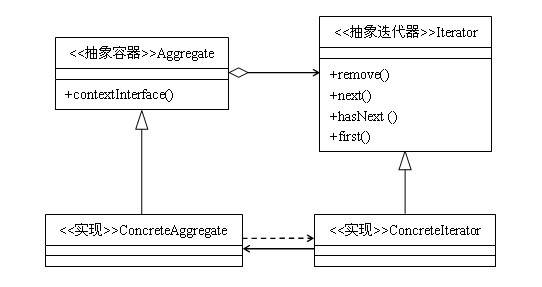

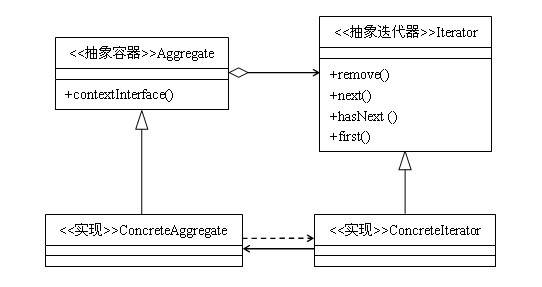

+**意图**:使用统一的方式遍历集合的各个元素,而无需暴露集合的内部结构。

+

+**使用场景**:

+

+* 为聚合类统一遍历接口;

+* 需要为聚合类提供多种遍历方式,例如二叉树的前序遍历,中序遍历,后续遍历,层次遍历;

+* 访问聚合对象的内容而无需暴露其内部结构;

+

+**类图**:

+

+

+

+如果你想要了解G1 GC,首先你要忘记关于新生代和老年代的一切知识点。Java堆(新生代&老年代)被划分为一个个大小固定的区域,对象被分派到这些区域中,如果一个区域被占满,则继续分配另外的区域,同时在后台维护一个优先列表,每次在允许的GC时间内,优先回收占用内存多的对象,这是就G1的来源。

+

+待续...

+

+## 参考文档

+* 翻译自:http://www.cubrid.org/blog/dev-platform/understanding-java-garbage-collection/

diff --git "a/32.\350\277\255\344\273\243\345\231\250\346\250\241\345\274\217.md" "b/32.\350\277\255\344\273\243\345\231\250\346\250\241\345\274\217.md"

new file mode 100644

index 0000000..2238327

--- /dev/null

+++ "b/32.\350\277\255\344\273\243\345\231\250\346\250\241\345\274\217.md"

@@ -0,0 +1,69 @@

+# 32.迭代器模式

+

+## 32.1 从Iterable接口说起

+

+ 在Java中的集合类接口有Set、List、Map、Queue,而这些接口的子类不计其数。以List接口为例,常用的实现类有:ArrayList、LinkedList、Stack、Vector。每个List子类实现的方式各不一样,ArrayList使用数组,LinkedList使用链表,Stack和Vector可以数组,甚至有些其他的集合类使用混合的数据结构。

+

+ 为了对外隐藏集合类的实现方式,保持良好的封装性,必须要统一这些集合类的迭代接口。同时调用者也无效关心集合类的底层实现方式,使用统一的接口即可对集合进行迭代。

+

+ 这不禁让人想到Java的`Iterable`接口,这也是迭代器模式在JDK中的经典实例。

+

+## 32.2 ArrayList实现Iterable接口

+

+Iterable.java 实现:

+```Java

+public interface Iterable {

+ Iterator iterator();

+}

+```

+Iterator.java 实现

+```Java

+public interface Iterator {

+ boolean hasNext();

+ E next();

+}

+```

+ArrayList.java 实现 Iterable接口

+```Java

+ @Override

+ public Iterator iterator() {

+ return new ArrayListIterator();

+ }

+```

+

+ArrayListIterator.java 实现

+```Java

+public class ArrayListIterator {

+ int cursor;

+ int lastRet = -1;

+

+ public boolean hasNext() {

+ return cursor != size;

+ }

+

+ public E next() {

+ int i = cursor;

+ if (i >= size)

+ throw new NoSuchElementException();

+ Object[] elementData = ArrayList.this.elementData;

+ cursor = i + 1;

+ return (E) elementData[lastRet = i];

+ }

+}

+```

+

+为了减少类文件和易于维护,我们通常把ArrayListIterator.java作为ArrayList的内部类,或者在ArrayList的iterator方法中世界采用匿名内部类,这里我们使用的外部类。

+

+## 32.3 迭代器模式

+

+**意图**:使用统一的方式遍历集合的各个元素,而无需暴露集合的内部结构。

+

+**使用场景**:

+

+* 为聚合类统一遍历接口;

+* 需要为聚合类提供多种遍历方式,例如二叉树的前序遍历,中序遍历,后续遍历,层次遍历;

+* 访问聚合对象的内容而无需暴露其内部结构;

+

+**类图**:

+

+ diff --git "a/33.\350\243\205\351\245\260\345\231\250\346\250\241\345\274\2172.md" "b/33.\350\243\205\351\245\260\345\231\250\346\250\241\345\274\2172.md"

new file mode 100644

index 0000000..fd930a4

--- /dev/null

+++ "b/33.\350\243\205\351\245\260\345\231\250\346\250\241\345\274\2172.md"

@@ -0,0 +1 @@

+# 33.装饰器模式2

diff --git "a/Java-Concurrency-Util/01.Java\345\271\266\345\217\221\345\267\245\345\205\267\347\261\273.md" "b/Java-Concurrency-Util/01.Java\345\271\266\345\217\221\345\267\245\345\205\267\347\261\273.md"

new file mode 100644

index 0000000..191a794

--- /dev/null

+++ "b/Java-Concurrency-Util/01.Java\345\271\266\345\217\221\345\267\245\345\205\267\347\261\273.md"

@@ -0,0 +1,23 @@

+#01.Java并发工具类

+

+Java 5 added a new Java package to the Java platform, the java.util.concurrent package. This package contains a set of classes that makes it easier to develop concurrent (multithreaded) applications in Java. Before this package was added, you would have to program your utility classes yourself.

+

+In this tutorial I will take you through the new java.util.concurrent classes, one by one, so you can learn how to use them. I will use the versions in Java 6. I am not sure if there are any differences to the Java 5 versions.

+

+I will not explain the core issues of concurrency in Java - the theory behind it, that is. If you are interested in that, check out my Java Concurrency tutorial.

+

+

+##Work in Progress

+

+This tutorial is very much "work in progress", so if you spot a missing class or interface, please be patient. I will add it when I get the time to do it.

+

+

+##Table of Contents

+

+Here is a list of the topics covered in this java.util.concurrent trail. This list (menu) is also present at the top right of every page in the trail.

+

+

+##Feel Free to Contact Me

+

+If you disagree with anything I write here about the java.util.concurrent utilities, or just have comments, questions, etc, feel free to send me an email. You wouldn't be the first to do so. You can find my email address on the about page.

+

diff --git "a/Java-Concurrency-Util/02.\351\230\273\345\241\236\351\230\237\345\210\227\357\274\210BlockingQueue\357\274\211.md" "b/Java-Concurrency-Util/02.\351\230\273\345\241\236\351\230\237\345\210\227\357\274\210BlockingQueue\357\274\211.md"

new file mode 100644

index 0000000..0454d9d

--- /dev/null

+++ "b/Java-Concurrency-Util/02.\351\230\273\345\241\236\351\230\237\345\210\227\357\274\210BlockingQueue\357\274\211.md"

@@ -0,0 +1,118 @@

+#02.阻塞队列(BlockingQueue)

+

+在Java `java.util.concurrent`包中的*BlockingQueue*是一个线程安全的阻塞队列接口,在这个接口中,入列和出类的操作都是线程安全的。

+

+##BlockingQueue用法(BlockingQueue Usage)

+

+阻塞队列(BlockingQueue)通常被用于**生产消费者模式**。看下面这张图:

+

+

+

+**生产者线程**生产新的对象,并把对象插入到队列中,直到队列中元素达到上限。如果阻塞队列达到了上限,则尝试插入对象的生产者线程会进入阻塞状态,它们会一直阻塞直到有**消费者线程**从队列中取出对象。

+

+**消费者线程**会持续从阻塞队列中取出对象并进行相应处理。如果消费者线程试图从空的阻塞队列中取出对象,则会进入阻塞状态,直到有生产者线程向队列中插入对象。

+

+##BlockingQueue的方法(BlockingQueue Methods)

+

+*BlockingQueue*分别有四种用于**入列**和**出列**的方法。每种方法都有不同的处理行为用以处理不同的应用场景:

+

+||Throws Exception| Special Value| Blocks| Times Out

+ ------|--------------|------------|--------------|----------------

+Insert | add(o) | offer(o) | put(o) |offer(o, timeout, timeunit)

+Remove | remove(o) | poll(o)| take(o) |poll(timeout, timeunit)

+Examine | element(o) | peek(o) | |

+

+

+四种不同的行为含义:

+

+* 抛出异常(Throws Exception):

+如果尝试的操作不能立即执行则抛出异常。

+* 特殊值(Special Value):

+如果尝试的操作不能立即执行,则返回特殊值(通常为true/false)。

+* 阻塞(Blocks):

+如果尝试的操作不能立即执行,则方法进入阻塞直到能够执行。

+* 超时(Times Out):

+如果尝试的方法不能立即执行,则方法进入阻塞直到能够执行,但如果阻塞超过设置的超时时间,则返回一个特殊值指明操作是否成功执行(通常为true/false)

+

+往*BlockingQueue*中插入null是不可能的,如果你往*BlockingQueue*中插入null,则会抛出*NullPointerException*异常。

+

+获取*BlockingQueue*中任意的元素都是可以的,不仅限于队列的头部或尾部。举个例子,如果你已经将一个任务插入队列,但你现在想取消这个任务,你可以通过类似`remove(task)`的方法来删除特定的在*BlockingQueue*中的元素。然而,这些操作都并非高性能的,除非迫不得已,不要调用这些方法。

+

+##BlockingQueue的实现类(BlockingQueue Implementations)

+

+由于*BlockingQueue*只是一个接口,所以我们要用时,应该选择具体的实现类。在Java 6的*java.util.concurrent*包中包含以下*BlockingQueue*的实现类:

+

+* ArrayBlockingQueue

+* DelayQueue

+* LinkedBlockingQueue

+* PriorityBlockingQueue

+* SynchronousQueue

+

+##BlockingQueue示例(Java BlockingQueue Example)

+

+下面是一个*BlockingQueue*的例子,这个例子中使用了实现*BlockingQueue*接口的*ArrayBlockingQueue*类。

+

+首先,这个*BlockingQueueExample*类中,分别启动了一个*Producer*和一个*Consumer*线程。*Producer*线程往共享的阻塞队列中插入数据,而*Consumer*线程从阻塞队列中取出数据并进行相应处理:

+

+```Java

+public class BlockingQueueExample {

+

+ public static void main(String[] args) throws Exception {

+ BlockingQueue queue = new ArrayBlockingQueue(1024);

+

+ Producer producer = new Producer(queue);

+ Consumer consumer = new Consumer(queue);

+

+ new Thread(producer).start();

+ new Thread(consumer).start();

+

+ Thread.sleep(4000);

+ }

+}

+```

+

+下面是*Producer*类的实现。注意这里的每个`put()`方法间线程都休眠了1s。这会导致等待队列元素的*Consumer*线程阻塞。

+

+```Java

+public class Producer implements Runnable{

+ protected BlockingQueue queue = null;

+

+ public Producer(BlockingQueue queue) {

+ this.queue = queue;

+ }

+

+ public void run() {

+ try {

+ queue.put("1");

+ Thread.sleep(1000);

+ queue.put("2");

+ Thread.sleep(1000);

+ queue.put("3");

+ } catch (InterruptedException e) {

+ e.printStackTrace();

+ }

+ }

+}

+```

+

+下面是*Consumer*类的实现,它仅仅只是连续从队列中取出三个元素并进行打印:

+

+```Java

+public class Consumer implements Runnable{

+ protected BlockingQueue queue = null;

+

+ public Consumer(BlockingQueue queue) {

+ this.queue = queue;

+ }

+

+ public void run() {

+ try {

+ System.out.println(queue.take());

+ System.out.println(queue.take());

+ System.out.println(queue.take());

+ } catch (InterruptedException e) {

+ e.printStackTrace();

+ }

+ }

+}

+```

\ No newline at end of file

diff --git a/Java-Concurrency-Util/03.ArrayBlockingQueue.md b/Java-Concurrency-Util/03.ArrayBlockingQueue.md

new file mode 100644

index 0000000..d85e550

--- /dev/null

+++ b/Java-Concurrency-Util/03.ArrayBlockingQueue.md

@@ -0,0 +1,15 @@

+#03.ArrayBlockingQueue

+

+*ArrayBlockingQueue*类实现了*BlockingQueue*接口。*ArrayBlockingQueue*是一个有界的阻塞队列,其内部维护了一个数组用于存储元素。有界意味着*ArrayBlockingQueue*不能存储无限量的元素。在同一时间内*ArrayBlockingQueue*存储的元素有一个上限,我们可以在初始化*ArrayBlockingQueue*时设置这个上限,而在此之后无法进行修改这个上限。

+

+*ArrayBlockingQueue*存储元素时遵循FIFO(先进先出)原则。在队列头部的元素的最先入列的元素,而在队列尾部的元素则是最新入列的元素。

+

+下面的例子演示了如何初始化*ArrayBlockingQueue*类:

+

+```Java

+BlockingQueue queue = new ArrayBlockingQueue(1024);

+

+queue.put("1");

+

+String string = queue.take();

+```

\ No newline at end of file

diff --git "a/Java-Concurrency-Util/04.DelayQueue\357\274\210\345\273\266\346\227\266\351\230\237\345\210\227\357\274\211.md" "b/Java-Concurrency-Util/04.DelayQueue\357\274\210\345\273\266\346\227\266\351\230\237\345\210\227\357\274\211.md"

new file mode 100644

index 0000000..d80e353

--- /dev/null

+++ "b/Java-Concurrency-Util/04.DelayQueue\357\274\210\345\273\266\346\227\266\351\230\237\345\210\227\357\274\211.md"

@@ -0,0 +1,47 @@

+04.DelayQueue(延时队列)

+

+DelayQueue class implements the BlockingQueue interface. Read the BlockingQueue text for more information about the interface.

+

+The DelayQueue keeps the elements internally until a certain delay has expired. The elements must implement the interface java.util.concurrent.Delayed. Here is how the interface looks:

+

+```Java

+public interface Delayed extends Comparable unbounded = new LinkedBlockingQueue();

+BlockingQueue bounded = new LinkedBlockingQueue(1024);

+

+bounded.put("Value");

+

+String value = bounded.take();

+```

\ No newline at end of file

diff --git a/Java-Concurrency-Util/06.PriorityBlockingQueue.md b/Java-Concurrency-Util/06.PriorityBlockingQueue.md

new file mode 100644

index 0000000..9f3d012

--- /dev/null

+++ b/Java-Concurrency-Util/06.PriorityBlockingQueue.md

@@ -0,0 +1,22 @@

+#06.PriorityBlockingQueue

+

+The PriorityBlockingQueue class implements the BlockingQueue interface. Read the BlockingQueue text for more information about the interface.

+

+The PriorityBlockingQueue is an unbounded concurrent queue. It uses the same ordering rules as the java.util.PriorityQueue class. You cannot insert null into this queue.

+

+All elements inserted into the PriorityBlockingQueue must implement the java.lang.Comparable interface. The elements thus order themselves according to whatever priority you decide in your Comparable implementation.

+

+Notice that the PriorityBlockingQueue does not enforce any specific behaviour for elements that have equal priority (compare() == 0).

+

+Also notice, that in case you obtain an Iterator from a PriorityBlockingQueue, the Iterator does not guarantee to iterate the elements in priority order.

+

+Here is an example of how to use the PriorityBlockingQueue:

+

+```Java

+BlockingQueue queue = new PriorityBlockingQueue();

+

+//String implements java.lang.Comparable

+queue.put("Value");

+

+String value = queue.take();

+```

diff --git a/Java-Concurrency-Util/README.md b/Java-Concurrency-Util/README.md

new file mode 100644

index 0000000..d1d2694

--- /dev/null

+++ b/Java-Concurrency-Util/README.md

@@ -0,0 +1 @@

+翻译自:http://tutorials.jenkov.com/java-util-concurrent/index.html

diff --git "a/Java-Concurrency/01.Java \345\271\266\345\217\221\344\270\216\345\244\232\347\272\277\347\250\213.md" "b/Java-Concurrency/01.Java \345\271\266\345\217\221\344\270\216\345\244\232\347\272\277\347\250\213.md"

new file mode 100644

index 0000000..b024e2c

--- /dev/null

+++ "b/Java-Concurrency/01.Java \345\271\266\345\217\221\344\270\216\345\244\232\347\272\277\347\250\213.md"

@@ -0,0 +1,20 @@

+# 01.Java 并发与多线程

+

+在以前,一台计算机只有一个CPU,而且在同一时间只能执行一个应用程序。后来引入了多任务的概念,这意味着计算机能再同一时间内执行多个应用程序。虽然,这并不是真正意义上的“同时”。多个应用程序共享计算机的CPU,操作系统在极小的时间切片内对应用程序进行切换以获得CPU资源。

+

+多任务的引入对软件开发者带来的新的挑战。应用程序不再能占用所有的CPU时间和所有的内存以及其他计算机资源。同时,一个好的应用程序在退出之后应该释放所有的系统系统以供其它应用程序使用。

+

+不久之后,多线程的概念被引入,这意味着,在一个应用程序中可以拥有多个执行线程。A thread of execution can be thought of as a CPU executing the program. 当一个应用程序有多个线程执行时,它就像拥有多个CPU在执行任务。

+

+多线程比多任务带来的挑战更加巨大。多线程意味着,在一个应用程序内部,可以存在多个线程同时地对内存进行读写操作。它会出现一些在单线程中永远不会出现的错误。有些错误也许在单个CPU的计算机上也不会出现(因为在单个CPU的计算机上,两个线程永远不可能真正意义上的同时执行)。现代计算机,基本都拥有多个CPU内核,线程可以通过独占内核来实现真正意义上的并行。

+

+如果一个线程往一块内存读取数据,而同时另一个线程往同样的地址写入数据,那么第一个线程读取的值是多少?原来的值?或是被第二个线程写入的值?或是两者混合的值?再举个例子,如果有两个线程同时往一块内存地址写入数据,那么这块内存最终的值是什么?第一个线程写入的值?还是第二个线程写入的值?还是两者的混合?如果没有恰当的预防措施,所有的这些结果都是可能的。线程的执行行为不能预测,所以最终的计算结果也跟着不同。

+

+

+##Java的多线程与并发(Multithreading and Concurrency in Java)

+

+Java was one of the first languages to make multithreading easily available to developers. Java had multithreading capabilities from the very beginning. Therefore, Java developers often face the problems described above. That is the reason I am writing this trail on Java concurrency. As notes to myself, and any fellow Java developer whom may benefit from it.

+

+The trail will primarily be concerned with multithreading in Java, but some of the problems occurring in multithreading are similar to problems occurring in multitasking and in distributed systems. References to multitasking and distributed systems may therefore occur in this trail too. Hence the word "concurrency" rather than "multithreading".

+

+This trail is still work in progress. Texts will be published whenver time is available to write them. Below is a list of the current texts in this trail. The list is also repeated at the top right of every page in the trail.

\ No newline at end of file

diff --git "a/Java-Concurrency/02.\345\244\232\347\272\277\347\250\213\347\232\204\345\245\275\345\244\204.md" "b/Java-Concurrency/02.\345\244\232\347\272\277\347\250\213\347\232\204\345\245\275\345\244\204.md"

new file mode 100644

index 0000000..5389b28

--- /dev/null

+++ "b/Java-Concurrency/02.\345\244\232\347\272\277\347\250\213\347\232\204\345\245\275\345\244\204.md"

@@ -0,0 +1,66 @@

+#02.多线程的好处

+

+尽管多线程带来的一些挑战,也让先写应用程序变得复杂,但它也带来了一系列好处:

+

+* 更好的资源利用率

+* 更简单的程序设计

+* More responsive programs

+

+

+##更好的资源利用率(Better resource utilization)

+

+试想一下,一个读取和处理本地系统文件的应用程序。比方说,从磁盘读取AF文件需要5秒,处理需要2秒,处理两个文件过程如下:

+

+```

+读取文件A消耗5s

+处理文件A消耗2s

+读取文件B消耗5s

+处理文件B消耗2s

+-------------

+总共消耗时间14s

+```

+

+当从磁盘读取文件时,大部分的CPU时间都消耗在了等待磁盘读取数据,而在这段时间内,CPU大部分时间内都是空闲的,它原本可以用来做其他操作。改变操作的顺序,可以让CPU得到更高的利用率。如下所示:

+

+```

+读取文件A消耗5s

+读取文件B消耗5s + 处理文件A消耗2s

+处理文件B消耗2s

+-------------

+总共消耗时间12s

+```

+

+当CPU读取往文件A后,则紧随着读取文件B,在此同时处理文件A。需要谨记的是,在等带磁盘读取文件时,CPU大部分时间都是空闲的。

+

+一般来说,在CPU等待IO操作时可以处理其他任务。IO操作可以是磁盘IO,网络IO或者用户的输入。磁盘IO和网络IO远远慢于CPU IO和内存IO。

+

+

+##更简单的程序设计(Simpler Program Design)

+

+If you were to program the above ordering of reading and processing by hand in a singlethreaded application, you would have to keep track of both the read and processing state of each file. Instead you can start two threads that each just reads and processes a single file. Each of these threads will be blocked while waiting for the disk to read its file. While waiting, other threads can use the CPU to process the parts of the file they have already read. The result is, that the disk is kept busy at all times, reading from various files into memory. This results in a better utilization of both the disk and the CPU. It is also easier to program, since each thread only has to keep track of a single file.

+

+##更具响应性的程序(More responsive programs)

+

+把单线程应用转化为多线程应用的另一个目标就是实现更具有响应性的应用程序。试想一下,监听某个端口请求的服务器应用程序,当请求到达时,应用程序进行处理,然后返回继续监听。程序设计勾勒如下:

+

+```Java

+while(server is active){

+ listen for request

+ process request

+}

+```

+

+如果请求需要很长的处理时间,在这段期间内,应用程序不能处理后续的请求,只有当应用程序处理请求返回监听状态,才能继续接收请求。

+

+另一种设计就是监听线程接收请求,然后将请求传递给工作线程进行处理,并立即返回到监听状态。工作线程对请求进行处理,然后将结果响应给客户端。这种设计勾勒如下:

+

+ ```Java

+while(server is active){

+ listen for request

+ hand request to worker thread

+}

+```

+

+在这种方式下,服务器线程将很快回到监听状态。因此,可以响应更多的用户请求。服务器变成更具有响应性。

+

+The same is true for desktop applications. If you click a button that starts a long task, and the thread executing the task is the thread updating the windows, buttons etc., then the application will appear unresponsive while the task executes. Instead the task can be handed off to a worker thread. While the worker thread is busy with the task, the window thread is free to respond to other user requests. When the worker thread is done it signals the window thread. The window thread can then update the application windows with the result of the task. The program with the worker thread design will appear more responsive to the user.

diff --git "a/Java-Concurrency/03.\345\244\232\347\272\277\347\250\213\347\232\204\346\210\220\346\234\254.md" "b/Java-Concurrency/03.\345\244\232\347\272\277\347\250\213\347\232\204\346\210\220\346\234\254.md"

new file mode 100644

index 0000000..b83143f

--- /dev/null

+++ "b/Java-Concurrency/03.\345\244\232\347\272\277\347\250\213\347\232\204\346\210\220\346\234\254.md"

@@ -0,0 +1,21 @@

+#03.多线程的代价

+

+Going from a singlethreaded to a multithreaded application doesn't just provide benefits. It also has some costs. Don't just multithread-enable an application just because you can. You should have a good idea that the benefits gained by doing so, are larger than the costs. When in doubt, try measuring the performance or responsiveness of the application, instead of just guessing.

+

+##设计更加复杂(More complex design)

+

+Though some parts of a multithreaded applications is simpler than a singlethreaded application, other parts are more complex. Code executed by multiple threads accessing shared data need special attention. Thread interaction is far from always simple. Errors arising from incorrect thread synchronization can be very hard to detect, reproduce and fix.

+

+##上下文切换的开销(Context Switching Overhead)

+

+When a CPU switches from executing one thread to executing another, the CPU needs to save the local data, program pointer etc. of the current thread, and load the local data, program pointer etc. of the next thread to execute. This switch is called a "context switch". The CPU switches from executing in the context of one thread to executing in the context of another.

+

+Context switching isn't cheap. You don't want to switch between threads more than necessary.

+

+You can read more about context switching on Wikipedia:

+

+http://en.wikipedia.org/wiki/Context_switch

+

+##增加资源消耗(Increased Resource Consumption)

+

+A thread needs some resources from the computer in order to run. Besides CPU time a thread needs some memory to keep its local stack. It may also take up some resources inside the operating system needed to manage the thread. Try creating a program that creates 100 threads that does nothing but wait, and see how much memory the application takes when running.

\ No newline at end of file

diff --git "a/Java-Concurrency/04.\345\210\233\345\273\272\345\222\214\345\220\257\345\212\250Java\347\272\277\347\250\213.md" "b/Java-Concurrency/04.\345\210\233\345\273\272\345\222\214\345\220\257\345\212\250Java\347\272\277\347\250\213.md"

new file mode 100644

index 0000000..efcec90

--- /dev/null

+++ "b/Java-Concurrency/04.\345\210\233\345\273\272\345\222\214\345\220\257\345\212\250Java\347\272\277\347\250\213.md"

@@ -0,0 +1,161 @@

+#04.创建和启动Java线程

+

+Java线程对象与其它的对象相似。线程对象是`java.lang.Thread`的实例,或是`java.lang.Thread`的子类的实例。跟普通对象不同,线程对象可以执行代码。

+

+##创建和启动线程(Creating and Starting Threads)

+

+在Java中,可以使用以下方式创建线程:

+

+```Java

+Thread thread = new Thread();

+```

+

+调用`Thread.start()`可以启动线程:

+

+```Java

+thread.start();

+```

+

+这个例子并没有为线程指定要执行的代码,所以它会很快返回并停止。

+

+有两种方法可以为线程指定需要执行的代码。第一种方式是创建`Thread`的子类并重写`run()`方法;第二种方式是将实现`Runnable`接口的对象作为构造参数传给`Thread(Runnale r)`。

+

+##继承Thread类(Thread Subclass)

+

+第一种为线程指定执行代码的方法:**继承Thread类并重写run方法。** `run()`方法在调用`Thread.start()`后执行。例子:

+

+```Java

+public class MyThread extends Thread {

+ public void run(){

+ System.out.println("MyThread running");

+ }

+}

+```

+

+通过下面的代码创建并执行线程:

+

+```

+ MyThread myThread = new MyThread();

+ myTread.start();

+```

+

+`start()`方法的调用会立即返回,并不会等待`run()`方法的执行,就好像这段代码被其他的CPU执行一样。

+

+你还可以使用匿名子类来创建线程对象:

+

+```Java

+Thread thread = new Thread(){

+ public void run(){

+ System.out.println("Thread Running");

+ }

+}

+

+thread.start();

+```

+

+##实现Runnable接口(Runnable Interface Implemention)

+

+第二种为线程指定执行代码的方法:创建实现`java.lang.Runnable`接口的对象,然后把该对象交给Thread执行。

+

+MyRunnable类实现Runnable接口:

+

+```Java

+public class MyRunnable implements Runnable {

+ public void run(){

+ System.out.println("MyRunnable running");

+ }

+}

+```

+

+将MyRunnable的实例作为构造参数传给Thread,然后通过`thread.start()`启动线程:

+

+```Java

+Thread thread = new Thread(new MyRunnable());

+thread.start();

+```

+

+当线程启动后,它会调用MyRunnable实例的`run()` 方法而不是自身的`run()`方法。

+

+

+同样,你可以通过匿名Runnable类来实现:

+

+````Java

+Runnable myRunnable = new Runnable(){

+

+ public void run(){

+ System.out.println("Runnable running");

+ }

+}

+

+Thread thread = new Thread(myRunnable);

+thread.start();

+```

+

+##子类还是实现接口(Subclass or Runnable)?

+

+There are no rules about which of the two methods that is the best. Both methods works. Personally though, I prefer implementing Runnable, and handing an instance of the implementation to a Thread instance. When having the Runnable's executed by a thread pool it is easy to queue up the Runnable instances until a thread from the pool is idle. This is a little harder to do with Thread subclasses.

+

+Sometimes you may have to implement Runnable as well as subclass Thread. For instance, if creating a subclass of Thread that can execute more than one Runnable. This is typically the case when implementing a thread pool. ??? Thread本身已经实现Runnable接口,这段话如何理解??

+

+##常见陷阱:(Common Pitfall: Calling run() instead of start())

+

+一个常见的陷阱就是调用`run()`方法来启动线程:

+

+```Java

+Thread newThread = new Thread(MyRunnable());

+thread.run(); //should be start();

+```

+

+这段代码的`run()`会正常执行,然而,它并不是由新创建的线程执行的,而是由当前线程执行。如果要创建新的线程来执行,必须调用`start()`方法而不是`run()`方法。

+

+

+##线程名称(Thread Names)

+

+ 当创建线程时,可以对线程命名,通过对线程命名可以用来区分不同的线程。举个例子,有多个线程通过`System.out`写内容到控制台,那么可以通过名字很方便地区分不同的线程:

+

+```Java

+Thread thread = new Thread("New Thread") {

+ public void run(){

+ System.out.println("run by: " + getname());

+ }

+};

+

+thread.start();

+System.out.println(thread.getName());

+```

+字符串“New Thread”通过构造函数传给Thread,这个就是线程的名字。可以通过`getName()`获取线程的名字。使用Runnable接口时,可以通过如下方式进行命名:

+

+```Java

+MyRunnable runnable = new MyRunnable();

+Thread thread = new Thread(runnable, "New Thread");

+

+thread.start();

+System.out.println(thread.getName());

+```

+

+获取当前线程的名字,可以通过一下方式获取:

+

+```Java

+String threadName = Thread.currentThread().getName();

+```

+##Java线程示例(Java Thread Example)

+

+下面的线程例子。首先打印执行main方法线程的名字(这个线程由JVM分配)。然后启动10个线程,并递增赋予它们名字i,每个线程打印自己的名字,最后停止:

+

+```Java

+public class ThreadExample {

+

+ public static void main(String[] args){

+ System.out.println(Thread.currentThread().getName());

+ for(int i=0; i<10; i++){

+ new Thread("" + i){

+ public void run(){

+ System.out.println("Thread: " + getName() + " running");

+ }

+ }.start();

+ }

+ }

+}

+```

+

+值得注意的是,虽然线程是依次按1,2,3启动,但是它们的执行却不是顺序的,也就说第一个启动的线程,并不一定第一个打印输出。这是因为线程**原则上**是并行执行而不是顺序执行,由JVM或操作系统来决定线程的执行顺序,这个顺序并不需要与它们的启动顺序一致。

\ No newline at end of file

diff --git "a/Java-Concurrency/05.\347\253\236\346\200\201\346\235\241\344\273\266\345\222\214\344\270\264\347\225\214\345\214\272.md" "b/Java-Concurrency/05.\347\253\236\346\200\201\346\235\241\344\273\266\345\222\214\344\270\264\347\225\214\345\214\272.md"

new file mode 100644

index 0000000..3aa7154

--- /dev/null

+++ "b/Java-Concurrency/05.\347\253\236\346\200\201\346\235\241\344\273\266\345\222\214\344\270\264\347\225\214\345\214\272.md"

@@ -0,0 +1,46 @@

+

+#05.竞态条件和临界区(Race Conditions and Critical Sections)

+

+在一个应用程序中运行多个线程这本身不会导致什么问题。问题在于多个线程同时对同一资源进行存取,例如同样的内存空间(变量、数组或对象),系统资源(数据库,文件系统等等)。如果是多个线程对同一资源进行读取,则不会有任何问题。

+

+以下的代码,如果有多个线程同时执行,则会导致问题:

+

+```Java

+public class Counter {

+ protected long count = 0;

+

+ public void add(long value){

+ this.count = this.count + value;

+ }

+}

+```

+

+想象一下,有两个线程:`A`和`B`,同时执行Counter类的实例的一个`add()`方法。操作系统中的线程调度如何进行,我们是无法进行预测的。并且,这个方法的代码在JVM内部并不是作为一个单独的指令执行的,而是有如下步骤:

+

+```

+get this.count from memory into register

+add value to register

+write register to memory

+```

+

+观察下面A线程和B线程的运行过程和结果:

+

+```

+this.count = 0;

+A: reads this.count into a register (0)

+B: reads this.count into a register (0)

+B: adds value 2 to register

+B: writes register value (2) back to memory. this.count now equals 2

+A: adds value 3 to register

+A: writes register value (3) back to memory. this.count now equals 3

+ ```

+

+ 线程A和线程B分别加2和3到counter中,在正常情况下,counter的结果应该为5。然而,由于两个线程的执行是互相交织的,两个线程同时从内存中读取0值到寄存器。然后它们分别把2和3跟0相加,最后由线程A把寄存器中的值写回到内存中,所以执行的最后结果是3。在上面的例子中,最后由线程A把3写到内存中,而实际上也可能是线程B。如果没有适当的同步机制,那么我们无从知晓这两个线程间到底如何交织执行。

+

+

+

+## 竞态条件和临界点(Race Conditions & Critical Sections)

+

+当多个线程对同一个资源进行竞争,访问这个资源的顺序是非常重要的,称之为**竞态条件**(he situation where two threads compete for the same resource, where the sequence in which the resource is accessed is significant, is called race conditions)。可以引起竞态条件的代码区域,称之为**临界区**。在前面的示例中,`add()`方法就是一个临界区。竞态条件可以通过在临界区进行适当的**线程同步**来避免。

+

+

diff --git "a/Java-Concurrency/06.\347\272\277\347\250\213\345\256\211\345\205\250\344\270\216\350\265\204\346\272\220\345\205\261\344\272\253.md" "b/Java-Concurrency/06.\347\272\277\347\250\213\345\256\211\345\205\250\344\270\216\350\265\204\346\272\220\345\205\261\344\272\253.md"

new file mode 100644

index 0000000..96a66b9

--- /dev/null

+++ "b/Java-Concurrency/06.\347\272\277\347\250\213\345\256\211\345\205\250\344\270\216\350\265\204\346\272\220\345\205\261\344\272\253.md"

@@ -0,0 +1,117 @@

+#06.线程安全与资源共享(Thread Safety and Shared Resources)

+

+

+如果一段代码可以安全地由多个线程并行调用,则称这段代码是**线程安全**的。如果一段代码是线程安全的,则这段代码不会引起**竞态条件**。只有当多个线程更新共享资源时才会发生**竞态条件**问题。因此,重要是要知道程序执行过程中哪些资源是线程共享的。

+

+##局部变量(Local Variables)

+

+局部变量存储在各自线程的栈中,这意味着局部变量不是线程共享的,这同样意味着所有的局部基本类型变量都是线程安全的。如下代码代码线程安全的:

+

+```Java

+public void someMethod(){

+

+ long threadSafeInt = 0;

+

+ threadSafeInt++;

+}

+```

+

+## 局部对象引用(Local Object References)

+

+ 局部对象的引用有一些差异。引用本身是不同享的,然而,引用的对象并不是存储在线程栈中,所有的对象都存储在Java堆,如果一个对象始终不逃逸出创建它的方法作用域之外,则它是线程安全的(If an object created locally never escapes the method it was created in, it is thread safe. )。而实际上,你也可以把这个对象引用传递给其他对象或者方法,只要传递的对象没有被其他线程处理,则它也是线程安全的。

+

+下面这个例子中的局部对象是线程安全的:

+

+```Java

+public void someMethod(){

+ LocalObject localObject = new LocalObject();

+

+ localObject.callMethod();

+ method2(localObject);

+}

+

+public void method2(LocalObject localObject){

+ localObject.setValue("value");

+}

+```

+

+在这个例子中,LocalObject的实例并没有从`someMethod()`方法中返回,也没有传递给其他在`someMethod()`作用域外的对象。每个执行`someMethod()`的线程都会创建各自的LocalObject实例,然后将引用传递给localObject引用变量。因此,这里的LocalObject的使用是线程安全的,实际上,整个`someMethod()`方法都是线程安全的,即使LocalObject实例作为参数传给本身对象或其他对象的方法,它都是线程安全的。唯一意外的情况就是:当localObject传递给其他的方法,而这些方法是可以被多线程访问的,则会导致线程安全问题。

+

+

+##对象成员变量(Object Members)

+

+对象的成员变量跟随对象本身存储在Java共享堆中。因此,如果两个变量调用同一个对象的一个方法,而这个方法会对对象的成员变量进行修改,则这个方法是**线程不安全**的。如下面这个例子:

+

+

+```Java

+public class NotThreadSafe{

+ StringBuilder builder = new StringBuilder();

+

+ public add(String text){

+ this.builder.append(text);

+ }

+}

+```

+

+如果两个线程同时调用同一个NotThreadSafe实例的`add(String texty)`方法,则会导致竞态条件:

+

+```Java

+NotThreadSafe sharedInstance = new NotThreadSafe();

+

+new Thread(new MyRunnable(sharedInstance)).start();

+new Thread(new MyRunnable(sharedInstance)).start();

+

+public class MyRunnable implements Runnable{

+ NotThreadSafe instance = null;

+

+ public MyRunnable(NotThreadSafe instance){

+ this.instance = instance;

+ }

+

+ public void run(){

+ this.instance.add("some text");

+ }

+}

+```

+

+注意两个MyRunnable实例共享同一个NoThreadSafe实例。因此,当两个线程同时调用`add()` 方法时则会导致竞态条件。

+

+然而,如果两个线程同时调用不同NoThreadSafe实例的`add()`方法则不会导致竞态条件。如下面这个例子:

+

+

+```Java

+new Thread(new MyRunnable(new NotThreadSafe())).start();

+new Thread(new MyRunnable(new NotThreadSafe())).start();

+```

+

+现在两个线程都拥有各自的NoThreadSafe实例,它们调用`add()`方法时并不会互相干扰,所以并没有导致竞态条件。因此,即使一个对象不是线程安全的,它们也可以用在不会引起竞态条件的代码中。

+

+

+##The Thread Control Escape Rule(线程控制逃逸规则)

+

+如果想知道你的代码是否线程安全,可以使用以下规则:

+

+> 如果一个资源的创建和使用始终在同一个线程的控制下,并且从没有逃逸出这个线程的控制,则认为是线程安全的。

+

+

+>If a resource is created, used and disposed within the control of the same thread, and never escapes the control of this thread, the use of that resource is thread safe.

+

+Resources can be any shared resource like an object, array, file, database connection, socket etc. In Java you do not always explicitly dispose objects, so "disposed" means losing or null'ing the reference to the object.

+

+Even if the use of an object is thread safe, if that object points to a shared resource like a file or database, your application as a whole may not be thread safe. For instance, if thread 1 and thread 2 each create their own database connections, connection 1 and connection 2, the use of each connection itself is thread safe. But the use of the database the connections point to may not be thread safe. For example, if both threads execute code like this:

+

+```

+check if record X exists

+if not, insert record X

+```

+

+If two threads execute this simultanously, and the record X they are checking for happens to be the same record, there is a risk that both of the threads end up inserting it. This is how:

+

+```

+Thread 1 checks if record X exists. Result = no

+Thread 2 checks if record X exists. Result = no

+Thread 1 inserts record X

+Thread 2 inserts record X

+```

+

+This could also happen with threads operating on files or other shared resources. Therefore it is important to distinguish between whether an object controlled by a thread is the resource, or if it merely references the resource.

\ No newline at end of file

diff --git "a/Java-Concurrency/07.\347\272\277\347\250\213\345\256\211\345\205\250\345\222\214\344\270\215\345\217\230\346\200\247.md" "b/Java-Concurrency/07.\347\272\277\347\250\213\345\256\211\345\205\250\345\222\214\344\270\215\345\217\230\346\200\247.md"

new file mode 100644

index 0000000..1264207

--- /dev/null

+++ "b/Java-Concurrency/07.\347\272\277\347\250\213\345\256\211\345\205\250\345\222\214\344\270\215\345\217\230\346\200\247.md"

@@ -0,0 +1,73 @@

+#07.线程安全和不变性

+

+只有当多个线程访问共享资源,并且一个线程或多个线程对资源进行写操作时,才会发生竞态条件。如果多个线程同时只对共享资源进行读操作,则不会发生**竞态条件**。

+

+我们可以通过把共享对象设置为不可变以至于让线程不能对对象进行改动,从而保证了线程安全。如下面这个例子:

+

+```Java

+public class ImmutableValue{

+

+ private int value = 0;

+

+ public ImmutableValue(int value){

+ this.value = value;

+ }

+

+ public int getValue(){

+ return this.value;

+ }

+}

+```

+

+注意value的值是通过构造函数进行设置的,并且value没有提供setter方法,所以一旦ImmutableValue实例被创建后,value的值就不能进行更改了。可以通过`getValue()`获取value的值,但不能进行改动。

+

+

+如果想要对ImmutableValue对象进行操作,可以通过返回一个新的实例来完成。例如下面这个

+

+```Java

+public class ImmutableValue{

+

+ private int value = 0;

+

+ public ImmutableValue(int value){

+ this.value = value;

+ }

+

+ public int getValue(){

+ return this.value;

+ }

+

+ public ImmutableValue add(int valueToAdd){

+ return new ImmutableValue(this.value + valueToAdd);

+ }

+

+}

+```

+

+注意这里返回了一个新的ImmutableValue实例,而不是对value的值进行修改。

+

+##引用不是线程安全的!(The Reference is not Thread Safe!)

+

+有一点需要谨记:**即使一个对象是线程安全的不可变对象,指向这个对象的引用也可能不是线程安全的。**

+

+```Java

+public class Calculator{

+ private ImmutableValue currentValue = null;

+

+ public ImmutableValue getValue(){

+ return currentValue;

+ }

+

+ public void setValue(ImmutableValue newValue){

+ this.currentValue = newValue;

+ }

+

+ public void add(int newValue){

+ this.currentValue = this.currentValue.add(newValue);

+ }

+}

+```

+

+The Calculator class holds a reference to an ImmutableValue instance. Notice how it is possible to change that reference through both the setValue() and add() methods. Therefore, even if the Calculator class uses an immutable object internally, it is not itself immutable, and therefore not thread safe. In other words: The ImmutableValue class is thread safe, but the use of it is not. This is something to keep in mind when trying to achieve thread safety through immutability.

+

+To make the Calculator class thread safe you could have declared the getValue(), setValue(), and add() methods synchronized. That would have done the trick.

diff --git "a/Java-Concurrency/08.Java\345\220\214\346\255\245\345\235\227.md" "b/Java-Concurrency/08.Java\345\220\214\346\255\245\345\235\227.md"

new file mode 100644

index 0000000..9ab49f6

--- /dev/null

+++ "b/Java-Concurrency/08.Java\345\220\214\346\255\245\345\235\227.md"

@@ -0,0 +1,183 @@

+#08.Java同步块

+

+Java synchronized block(Java同步块)用来对方法或代码块进行标记,表明这个方法或代码块是同步的。Java同步块可以避免**竞态条件**。

+

+

+##synchronized关键字(The Java synchronized Keyword)

+

+Java中的同步块使用关键字**synchronized**进行标记**。同步块在Java中是同步在某个对象上。所有同步在一个对象上的同步块在同一时间只能被一个线程进入并执行里面的代码。**其他所有试图进入该对象同步块的线程将被阻塞,直到执行该同步块中的线程退出。

+

+**synchronized**关键字可以被用于标记以下四种不同类型的块:

+

+- 实例方法(Instance methods)

+- 静态方法(Static methods)

+- 实例方法中的代码块(Code blocks inside instance methods)

+- 静态方法中的代码块(Code blocks inside static methods)

+

+上述同步块都同步在不同对象上。实际需要那种同步块视具体情况而定。

+

+##同步实例方法(Synchronized Instance Methods)

+

+下面是一个同步的实例方法:

+

+```Java

+ public synchronized void add(int value){

+ this.count += value;

+ }

+```

+

+使用**synchronized**关键字对方法进行声明,告诉JVM这是一个同步的方法。

+

+Java中的**同步实例方法是基于这个方法所属的实例对象上进行同步的**。因此,每一个同步实例方法都是基于各自的实例对象进行同步的。同一时间,只有一个线程可以访问一个实例对象的同步实例方法。如果有多个实例存在,那么每个线程都可以同时访问各自不同实例对象的同步实例方法,一个实例对象对应一个线程。

+

+##同步静态方法(Synchronized Static Methods)

+

+静态方法的同步与实例方法一致,都是使用**synchronized**在方法放进行声明。

+

+```Java

+ public static synchronized void add(int value){

+ count += value;

+ }

+```

+

+同样的,这里的**synchronized**关键字用于告诉JVM这个静态方法是同步的。

+

+**同步静态方法是基于这个静态方法所属的类对象进行同步的**。由于在JVM中,每个类有且只有一个类对象,因此,在同一时间内,只有一个线程能够访问同一个类的同步静态方法。

+

+如果同步静态方法位于不同的类中,那么每个线程都可以访问各自对应的类的同步静态方法,一个线程对应一个类。

+

+##实例方法中的同步块(Synchronized Blocks in Instance Methods)

+

+有些时候,你并不需要同步整一个方法,而只需要同步这个方法下的一小部分代码块。你可以在方法里面使用同步代码块。

+

+下面这个例子就是在非同步方法里面使用了同步代码块:

+

+```Java

+ public void add(int value){

+

+ synchronized(this){

+ this.count += value;

+ }

+ }

+```

+

+这个例子里,用了Java的同步代码块来使代码进行同步,让这个方法像同步方法一样执行。

+

+注意在Java的同步代码块里,需要在括号里传递一个对象。这个例子中,这个对象是**this**,this指的是这个实例对象本身。在Java同步代码块括号中的对象称为**监听器对象**。意味着,这个同步块是基于这个监听器对象进行同步的。同步实例方法使用其所在实例对象作为监听器对象。

+

+**同一时间,只有一个线程能够访问基于同一个监听器对象的同步代码。**

+

+下面这个例子,两个同步代码都是基于同一个实例对象进行同步的:

+

+```Java

+ public class MyClass {

+

+ public synchronized void log1(String msg1, String msg2){

+ log.writeln(msg1);

+ log.writeln(msg2);

+ }

+

+

+ public void log2(String msg1, String msg2){

+ synchronized(this){

+ log.writeln(msg1);

+ log.writeln(msg2);

+ }

+ }

+ }

+```

+

+因此,在这个例子中,每次仅能有一个线程能够访问这两个同步代码的任意一个同步代码。

+

+如果第二个同步块是基于其他监听器对象,例如`synchronized (this.getClass()) {}`,则此时第一个和第二个同步代码的监听器对象分别为:当前实例对象和当前类对象。因此,这两个同步代码可以同时由不同的线程进行访问。

+

+##静态方法中的同步块(Synchronized Blocks in Static Methods)

+

+下面这个例子中,两个同步代码都是基于当前的类对象进行同步的:

+

+```Java

+ public class MyClass {

+

+ public static synchronized void log1(String msg1, String msg2){

+ log.writeln(msg1);

+ log.writeln(msg2);

+ }

+

+

+ public static void log2(String msg1, String msg2){

+ synchronized(MyClass.class){

+ log.writeln(msg1);

+ log.writeln(msg2);

+ }

+ }

+ }

+```

+

+同一时间,只有一个线程能够访问这两个同步代码的任意一个同步代码。

+

+如果第二个方法的监听器对象非MyClass.class对象,则两个同步代码可以同时被两个线程访问。

+

+

+##Java同步示例(Java Synchronized Example)

+

+Here is an example that starts 2 threads and have both of them call the add method on the same instance of Counter. Only one thread at a time will be able to call the add method on the same instance, because the method is synchronized on the instance it belongs to.

+

+```Java

+public class Counter{

+ long count = 0;

+

+ public synchronized void add(long value){

+ this.count += value;

+ }

+}

+public class CounterThread extends Thread{

+ protected Counter counter = null;

+

+ public CounterThread(Counter counter){

+ this.counter = counter;

+ }

+

+ public void run() {

+for(int i=0; i<10; i++){

+ counter.add(i);

+ }

+ }

+}

+public class Example {

+

+ public static void main(String[] args){

+ Counter counter = new Counter();

+ Thread threadA = new CounterThread(counter);

+ Thread threadB = new CounterThread(counter);

+

+ threadA.start();

+ threadB.start();

+ }

+}

+```

+

+Two threads are created. The same Counter instance is passed to both of them in their constructor. The Counter.add() method is synchronized on the instance, because the add method is an instance method, and marked as synchronized. Therefore only one of the threads can call the add() method at a time. The other thread will wait until the first thread leaves the add() method, before it can execute the method itself.

+

+If the two threads had referenced two separate Counter instances, there would have been no problems calling the add() methods simultaneously. The calls would have been to different objects, so the methods called would also be synchronized on different objects (the object owning the method). Therefore the calls would not block. Here is how that could look:

+

+```Java

+public class Example {

+ public static void main(String[] args){

+ Counter counterA = new Counter();

+ Counter counterB = new Counter();

+ Thread threadA = new CounterThread(counterA);

+ Thread threadB = new CounterThread(counterB);

+

+ threadA.start();

+ threadB.start();

+ }

+}

+```

+

+Notice how the two threads, threadA and threadB, no longer reference the same counter instance. The add method of counterA and counterB are synchronized on their two owning instances. Calling add() on counterA will thus not block a call to add() on counterB.

+

+##Java Concurrency Utilities

+

+`synchronized`机制是Java第一个引进的用于同步多线程资源共享的机制。然而`synchroniez`机制并不高效。这就是为什么Java 5提供了一整套的并发工具类,以帮助开发人员实现更细粒度的并发控制

+。

+Status API Training Shop Blog About © 2014 GitHub, Inc. Terms Privacy Security Contact

diff --git "a/Java-Concurrency/09.Java\347\232\204volatile\345\205\263\351\224\256\345\255\227.md" "b/Java-Concurrency/09.Java\347\232\204volatile\345\205\263\351\224\256\345\255\227.md"

new file mode 100644

index 0000000..4cf1bfb

--- /dev/null

+++ "b/Java-Concurrency/09.Java\347\232\204volatile\345\205\263\351\224\256\345\255\227.md"

@@ -0,0 +1,90 @@

+#09.Java的volatile关键字

+

+未完,有疑惑,待续!

+

+Java的**volatile**关键字用来标识让变量**像存储在主内存中一样** (The Java volatile keyword is used to mark a Java variable as "being stored in main memory". )。更准确地说,被volatile声明的变量,它们的读操作都是从**主内存**中而不是**CPU缓存**中读取,同时,以及它们的写操作,都会同时写到**主内存**和**CPU缓存**中。

+

+实际上,从Java 5开始,volatile关键字不仅仅只是保证变量从主内存中读取和写入。我会在接下来的章节进行详细讲解。

+

+##volatile保证变量可见性(Java volatile Guarantees Variable Visibility)

+

+Java的volatile关键字保证了**线程间变量的可见性**。这听起来似乎很抽象, 让我来解释一下。

+

+在多线程的应用程序中,当多个线程对没有volatile关键字声明的变量进行操作时,基于性能考虑,每个线程都会从主内存中拷贝一份变量的值到CPU缓存里。如果你的计算器拥有多个CPU,那么每个线程都有可能使用不同的CPU运行。这意味着,每个线程都有可能拷贝一份数据到各自的CPU缓存中。这种情况如下图所示:

+

+

+

+没有使用volatile声明的变量,将不能保证**JVM何时从主内存中读取数据到CPU缓存,以及何时从CPU缓冲中读取数据到内存**。让我解释一下这样会发生什么状况:

+

+想象这样一个场景:多个线程访问同一个共享对象,这个对象包含一个计数器变量:

+

+```Java

+public class SharedObject {

+ public int counter = 0;

+}

+```

+

+线程1读取counter变量值0到CPU缓存,同时对该变量加1,但并不立即写回主内存。线程2同样读取主内存中的counter变量值0到自己的CPU缓存中,同样对这个值加1,也不立即写回主内存。线程1和线程2实际上并不是同步的(Thread 1 and Thread 2 are now practically out of sync. )。此时,counter的正确值应该为2,而线程1和线程2在CPU缓存的值却是1,而主内存中counter的值目前仍然是0。这种情况是十分混乱的,即使线程将CPU缓存的值写回住内存中,这个值也是错误的。

+

+如果使用**volatile**关键字声明counter变量,JVM将会保证每次读取counter的值都会从主内存中读取,每次对counter变量的修改都会立即写回到主内存。

+

+```Java

+public class SharedObject {

+ public volatile int counter = 0;

+}

+```

+

+在一些情况下,简单地用volatile声明变量,也许已经足够让多个线程每次读取的都是变量的最新值。

+

+然而,在另一些情况下,当两个线程都对变量读取和写入时,volatile并不足够。线程1读取counter的值0到CPU1的寄存器中,于此同时(或紧接着)线程2读取counter的值0到CPU2的寄存器中。两个线程都是直接从主内存中读取counter的值。然后,两个线程分别对counter的值加1,并写回主内存中。经过加1后,两个寄存器中的值都是1,并且把这个值写回主内存中。然而counter的正确值应该为2 。

+

+上述问题中,多个线程并没有读取到变量的最新值,是因为其他线程还没将寄存器的值写回主内存,这就是“** 可见性**”问题。一个线程的更新对其他线程不可见。

+

+In some cases simply declaring a variable volatile may be enough to assure that multiple threads accessing the variable see the latest written value. I will get back to which cases volatile is sufficient later.

+

+In the situation with the two threads reading and writing the same variable, simply declaring the variable volatile is not enough. Thread 1 may read the counter value 0 into a CPU register in CPU 1. At the same time (or right after) Thread 2 may read the counter value 0 into a CPU register in CPU 2. Both threads have read the value directly from main memory. Now both variables increase the value and writes the value back to main memory. They both increment their register version of counter to 1, and both write the value 1 back to main memory. The value should have been 2 after two increments.

+

+The problem with multiple threads that do not see the latest value of a variable because that value has not yet been written back to main memory by another thread, is called a "visibility" problem. The updates of one thread are not visible to other threads.

+

+##valatile的保证(The Java volatile Guarantee)

+

+从Java 5之后,volatile关键字不仅仅只是保证每次都是从主内存中读取和写入数据。实际上volatile关键字保证:

+

+> If Thread A writes to a volatile variable and Thread B subsequently reads the same volatile variable, then all variables visible to Thread A before writing the volatile variable, will also be visible to Thread B.

+